The PostgreSQL Global Development Group released the first beta version of PostgreSQL 18 on May 8, 2025, with the official version launching on September 25. In this article, the IvorySQL community breaks down six highlight features of PostgreSQL 18.

1. PG Asynchronous I/O (AIO) Framework: The First Step Toward Breaking the Synchronous Blocking Bottleneck

PostgreSQL 18 introduces a全新的 asynchronous I/O subsystem. This new mechanism allows parallel execution of multiple asynchronous read-ahead operations in specific scenarios, enabling the CPU to continue processing queries without waiting for data returns, thereby reducing wait overhead. This framework lays the groundwork for deeper and more comprehensive asynchronous I/O performance optimizations in the future.

Key Performance Improvements

[Currently, only asynchronous reads are implemented; asynchronous writes are not yet supported.]

- All sequential scan scenarios can achieve parallelized sequential read-ahead using the adapted

ReadStreamfacility for asynchronous I/O, improving sequential scan performance beyond the previous advisory read-ahead viaposix_fadvise. - In cloud storage scenarios, where single-blocking read I/O takes longer than local I/O, parallelized read-ahead with asynchronous I/O shows even greater advantages.

- Asynchronous I/O currently supports asynchronous reads for sequential scans, bitmap heap scans, and VACUUM operations. Early tests show a 2-3x performance improvement for read-intensive queries.

The diagram illustrates how the ReadStream mechanism with asynchronous I/O can asynchronously pre-read buffers that may be used later. In contrast, synchronous I/O requires waiting for each I/O operation to complete before proceeding, reducing system throughput.

Configuration

# - I/O -

#backend_flush_after = 0 # measured in pages, 0 disables

effective_io_concurrency = 300 # 1-1000; 0 disables issuing multiple simultaneous IO requests

maintenance_io_concurrency = 300 # 1-1000; same as effective_io_concurrency

io_max_combine_limit = 256kB # usually 1-128 blocks (depends on OS)

# (change requires restart)

io_combine_limit = 256kB # usually 1-128 blocks (depends on OS)

io_method = io_uring # worker, io_uring, sync

# (change requires restart)

io_max_concurrency = 128 # Max number of IOs that one process

# can execute simultaneously

# -1 sets based on shared_buffers

# (change requires restart)

#io_workers = 3 # 1-32;

Users can choose from three io_method options to enable asynchronous I/O:

worker: Background I/O workers handle I/O requests from backend processes.io_uring: On Linux systems, the io_uring subsystem uses kernel threads to process PostgreSQL I/O requests.sync: Synchronous I/O that meets the asynchronous I/O framework interface requirements.

To enable asynchronous I/O, users must set the above GUC parameters appropriately. The maximum number of asynchronous I/O handles per process is io_max_concurrency. Setting it to -1 lets the database auto-select a value. If the value is too large, it may prevent database startup due to excessive memory allocation; if too small, it may not fully utilize asynchronous I/O performance.

After starting the database, users can monitor asynchronous I/O execution in real-time via the pg_aios view:

postgres=# SELECT * FROM pg_aios;

-[ RECORD 1 ]---+-------------------------------------------

pid | 85834

io_id | 14208

io_generation | 204

state | SUBMITTED

operation | readv

off | 116252672

length | 8192

target | smgr

handle_data_len | 1

raw_result |

result | UNKNOWN

target_desc | block 14191 in file "base/5/16427"

f_sync | f

f_localmem | f

f_buffered | t

...

See the official documentation for detailed column descriptions.

Framework Design

PostgreSQL 18 introduces an asynchronous I/O framework configurable via GUC parameters, including implementation method (io_method: worker, io_uring, or sync), concurrency scale (e.g., *_io_concurrency, io_max_concurrency), and implementation-specific parameters (e.g., io_workers). The framework abstracts I/O targets (currently supporting smgr; WAL support planned) and behaviors at different stages and from different data sources (shared buffer/local buffer) via the PgAioHandleCallbacks structure to support future extensions. Related memory is allocated in shared memory at startup and remains fixed. Processes access asynchronous I/O resources by number, with handles reused via generation numbers.

Currently, this version supports asynchronous reads for smgr but not asynchronous WAL reads or writes. Asynchronous smgr writes are still under development.

Modifications to Existing Facilities

- Extended smgr interface: Added

smgr_startreadvmethod to support asynchronous reads. - Implemented callback structures: smgr must implement

PgAioTargetInfoandPgAioHandleCallBackscallback structures. - Adapted existing modules: smgr, buffer manager, and other modules must populate asynchronous I/O abstraction structures for framework compatibility.

- Critical section handling: Synchronous I/O can be initiated within PostgreSQL critical sections; asynchronous I/O, due to its segmented execution and callbacks, must avoid operations that might fail in callbacks (e.g., using

RelPathStrinstead of palloc’schar*strings) to ensure safety. - Optimized high-level interfaces: Modified interfaces like

ReadStreamusing asynchronous I/O to achieve true read-ahead, significantly improving I/O performance for sequential scans,pg_prewarm, and ANALYZE operations, outperforming the previousposix_fadviseapproach.

Usage Notes and Future Outlook

- io_uring requires a newer kernel: Older Linux kernels do not support io_uring. Early versions may support it but with limited functionality and performance compared to newer kernels.

- Architectural concurrency limitations: Due to the multi-process architecture, PostgreSQL has limited parallel computational tasks during asynchronous I/O, making fine-grained task-level asynchrony difficult. Current performance gains are mainly in

ReadStreamsequential read-ahead and parallel I/O operations. - Future performance improvements: Linux io_uring supports Direct I/O (DIO), laying the foundation for enabling DIO in PostgreSQL. Future DIO adoption could eliminate double buffering (OS-level I/O data buffering and PostgreSQL-level I/O buffering) to reduce unnecessary data copying. On high-speed NVMe devices, combining DIO with the

IORING_SETUP_IOPOLLoption for polling I/O completion could further enhance performance. - More asynchronous I/O backends: Besides sync mode, the official PostgreSQL 18 release supports only

workerandio_uringbackends. The asynchronous I/O framework is now robust, and future versions may support Windows IORing, IOCP, and Posix asynchronous I/O backends, offering users more choices.

Summary

PostgreSQL 18’s asynchronous I/O framework enhances the system’s I/O capabilities and improves architectural extensibility. Users can benefit from asynchronous I/O by adjusting GUC parameters according to their needs. The framework is now well-designed, making future support for other asynchronous I/O backends straightforward.

- Users on any platform can try

io_method = workerorsync. - For non-officially adapted io_uring on older Linux kernels, thorough testing is recommended before use.

2. Skip Scan: Accelerating B-Tree Indexes

In versions before PostgreSQL 18, multi-column B-tree indexes could be used for queries involving any subset of the index columns, with highest efficiency when constraints were applied to the leading (leftmost) columns. Equality constraints on leading columns, plus any inequality constraints on the first non-equality-constrained column, limited the scanned index portion. For example, given an ascending index on (a, b, c) with non-null fields and the query condition WHERE a = 5 AND b >= 42 AND c < 77, the index would scan from the first entry with a = 5 and b = 42 to the last entry with a = 5. Entries with c >= 77 would be skipped but still scanned. In principle, such an index could be used for queries constraining b and/or c but not a—but required a full index scan, so the optimizer often preferred a sequential table scan over an index scan.

Key Improvements

Starting with PostgreSQL 18: If a B-tree index scan can apply a skip scan, it reduces index reads by applying constraints for each column during traversal. Skip scan works by internally generating dynamic equality constraints that match each possible value in the index columns.

Performance Test Comparison

Versions: PostgreSQL 17 vs. PostgreSQL 18

Table Structure and Index

CREATE TABLE t1 (

c1 INT,

c2 INT,

c3 FLOAT

) WITH (fillfactor = 80);

CREATE INDEX idx_t1_c1c2 ON t1(c1, c2);

Data Generation

INSERT INTO t1

SELECT (random() * 1000)::INT, (random() * 10000)::INT, random()

FROM generate_series(1, 1000000) g;

Data Import via COPY

COPY t1 FROM '/.../t1.csv' WITH (FORMAT csv);

Query Statement Using the Second Column of the Composite Index

EXPLAIN ANALYZE SELECT * FROM t1 WHERE c2 = 100;

PostgreSQL 17 Execution Plan

Chooses a parallel sequential scan:

QUERY PLAN

-------------------------------------------------------------------------------------------------------------------

Gather (cost=1000.00..12986.33 rows=100 width=16) (actual time=1.125..76.076 rows=90 loops=1)

Workers Planned: 2

Workers Launched: 2

-> Parallel Seq Scan on t1 (cost=0.00..11976.33 rows=42 width=16) (actual time=1.414..68.624 rows=30 loops=3)

Filter: (c2 = 100)

Rows Removed by Filter: 333303

Planning Time: 0.792 ms

Execution Time: 76.165 ms

(8 rows)

Disabling sequential scan forces an index scan, resulting in a non-optimal, slower plan:

PostgreSQL 18 Execution Plan

Chooses an index scan, showing significant performance improvement from skip scan:

QUERY PLAN

---------------------------------------------------------------------------------------------------------------------------

Index Scan using idx_t1_c1c2 on t1 (cost=0.42..3900.84 rows=100 width=16) (actual time=0.225..11.464 rows=90.00 loops=1)

Index Cond: (c2 = 100)

Index Searches: 1002

Buffers: shared hit=3096

Planning Time: 0.141 ms

Execution Time: 11.522 ms

(6 rows)

Disabling index and bitmap scans forces a sequential scan:

postgres=# EXPLAIN ANALYZE SELECT * FROM t1 WHERE c2 = 100;

QUERY PLAN

----------------------------------------------------------------------------------------------------------------------

Gather (cost=1000.00..12986.33 rows=100 width=16) (actual time=1.486..86.881 rows=90.00 loops=1)

Workers Planned: 2

Workers Launched: 2

Buffers: shared hit=6768

-> Parallel Seq Scan on t1 (cost=0.00..11976.33 rows=42 width=16) (actual time=2.758..78.712 rows=30.00 loops=3)

Filter: (c2 = 100)

Rows Removed by Filter: 333303

Buffers: shared hit=6768

Planning Time: 0.141 ms

Execution Time: 86.926 ms

(10 rows)

Usage Notes

Skip scan currently supports only equality comparison conditions.

Summary

PostgreSQL 18’s index skip scan allows multi-column B-tree indexes to be used for queries that reference only the second or later columns with equality conditions, significantly reducing the number of index entries that need to be accessed and greatly improving efficiency.

3. Virtual Generated Columns: Flexible Balance Between Storage and Computation

PostgreSQL 18 introduces developer experience-related features focused on simplifying development workflows and enhancing code flexibility, allowing developers to leverage PostgreSQL’s capabilities more efficiently. The IvorySQL database has long focused on Oracle compatibility, including syntax compatibility for virtual columns:

column [datatype] [GENERATED ALWAYS] AS (column_expression) [VIRTUAL]

Now, PostgreSQL 18 finally brings virtual column functionality. Virtual columns are table columns that do not store data; their values are dynamically computed during queries. Compared to stored columns, virtual columns save storage space, and querying them computes the expression value as the column value.

Basic Syntax

In PostgreSQL 18, virtual column syntax is similar to stored column syntax, adding the keyword VIRTUAL. If both STORED and VIRTUAL are omitted, the default is virtual. The syntax is:

GENERATED ALWAYS AS (generation_expr) [ STORED | VIRTUAL ]

Virtual Column Example

Virtual columns are identified via column constraints. The following demonstrates creating, querying, and adding virtual columns:

-- Create a table with a virtual column, price_with_tax

CREATE TABLE products (

id SERIAL PRIMARY KEY,

name TEXT NOT NULL,

price NUMERIC(10,2) NOT NULL,

tax_rate NUMERIC(5,2) DEFAULT 0.20,

price_with_tax NUMERIC(10,2) GENERATED ALWAYS AS (price * (1 + tax_rate)) VIRTUAL

);

-- Insert data

INSERT INTO products (name, price, tax_rate)

VALUES ('Laptop', 1000.00, 0.20);

-- Query data (virtual column computed automatically)

SELECT name, price, tax_rate, price_with_tax FROM products;

name | price | tax_rate | price_with_tax

-------+---------+----------+----------------

Laptop | 1000.00 | 0.20 | 1200.00

(1 row)

-- Add a virtual column to the table

ALTER TABLE products ADD COLUMN selling_price NUMERIC(10,2)

GENERATED ALWAYS AS (

price * (1 - 0.2) * (1 + tax_rate)

) VIRTUAL;

Implementation OverviewVirtual Column Creation

Virtual columns are stored similarly to ordinary columns in the pg_attribute system table. The attgenerated column stores generated column information: 's' indicates a stored column. PostgreSQL 18’s virtual columns are identified by 'v' in this field, and the virtual column expression is stored in the pg_attrdef system table.

-- View virtual column information; attgenerated = 'v' indicates a virtual column

postgres=# SELECT * FROM pg_attribute WHERE attname = 'price_with_tax';

-[ RECORD 1 ]--+---------------

attrelid | 16388

attname | price_with_tax

atttypid | 1700

attlen | -1

attnum | 5

atttypmod | 655366

attndims | 0

attbyval | f

attalign | i

attstorage | m

attcompression |

attnotnull | f

atthasdef | t

atthasmissing | f

attidentity |

attgenerated | v

attisdropped | f

attislocal | t

attinhcount | 0

attcollation | 0

attstattarget |

attacl |

attoptions |

attfdwoptions |

attmissingval |

-- View stored virtual column expression

postgres=# SELECT pg_get_expr(adbin, adrelid) FROM pg_attrdef WHERE adnum = 5;

pg_get_expr

-------------------------------------

(price * ((1)::numeric + tax_rate))

(1 row)

Virtual Column Insertion or Update

Since virtual columns do not occupy storage space, any operation attempting to update or insert into a virtual column is restricted.

-- Attempt to insert into virtual column

INSERT INTO products (name, price, tax_rate, price_with_tax) VALUES ('Laptop', 1000.00, 0.20, 1);

ERROR: cannot insert a non-DEFAULT value into column "price_with_tax"

DETAIL: Column "price_with_tax" is a generated column.

-- Attempt to update virtual column

UPDATE products SET price_with_tax = 1 WHERE name = 'Laptop';

ERROR: column "price_with_tax" can only be updated to DEFAULT

DETAIL: Column "price_with_tax" is a generated column.

Virtual Column Query

In PostgreSQL 18, virtual column query implementation is completed during the execution plan generation phase. During logical rewrite optimization, if the query range table includes virtual columns, their expressions are retrieved from pg_attrdef and replace the original virtual column names. Thus, querying a virtual column value is equivalent to computing its expression—e.g., SELECT price_with_tax becomes SELECT (price * ('1'::numeric + tax_rate)) AS price_with_tax.

The following shows the virtual column being replaced by its expression:

postgres=# EXPLAIN VERBOSE SELECT name, price, tax_rate, price_with_tax

FROM products;

QUERY PLAN

----------------------------------------------------------------------

Seq Scan on public.products (cost=0.00..23.12 rows=750 width=76)

Output: name, price, tax_rate, (price * ('1'::numeric + tax_rate))

(2 rows)

Applicable Scenarios

- Use virtual columns to save disk space since they do not occupy storage.

- Use when column values need to change based on dependent columns, as virtual columns are dynamically fetched.

- Use when the virtual column expression is simple, as querying virtual columns consumes CPU resources; complex expressions may consume too much.

- Facilitates migration from Oracle, which has virtual column functionality, to PostgreSQL.

Pending Improvements

Some features are not yet supported but may be added in future versions:

- Creating indexes or using virtual columns in indexes.

- Unique constraints on virtual columns.

- Extended statistics on virtual columns.

- Foreign key constraints on virtual columns.

- NOT NULL constraints on virtual columns (check constraints are supported).

ALTER TABLE / DROP EXPRESSION.- Virtual columns cannot have domain types.

- Logical replication does not support virtual columns.

Summary

Virtual columns differ fundamentally from ordinary and stored columns because they do not occupy disk space, and their value retrieval differs: ordinary or stored columns fetch data from disk, while virtual columns compute values dynamically.

4. UUID Enhancements: Improved Orderliness and Usability

The randomness of traditional UUIDs (especially v4) is a major pain point when used as primary keys:

- Randomly generated UUIDs have uncertain insertion positions, causing frequent index tree splits and reorganization, significantly reducing write performance.

- They disrupt the physical storage order of clustered indexes (e.g., InnoDB), increasing disk I/O.

- Range queries and sorting are inefficient, leading to poor performance.

UUIDv7’s Key Breakthrough: Time-Ordered Architecture

UUIDv7 introduces timestamps in the high-order bits of the UUID to address the complete randomness of traditional UUID generation, ensuring newly generated UUIDs are naturally sorted by creation time. This allows B-tree indexes to perform sequential inserts like auto-incrementing integers while maintaining the global uniqueness and distributed generation advantages of UUIDs.

This gives UUIDv7 the following standout advantages as a primary key:

- Strictly increasing by creation time.

- Reduces index fragmentation.

- Improves cache hit rates.

- Suitable for high-concurrency inserts and efficient queries.

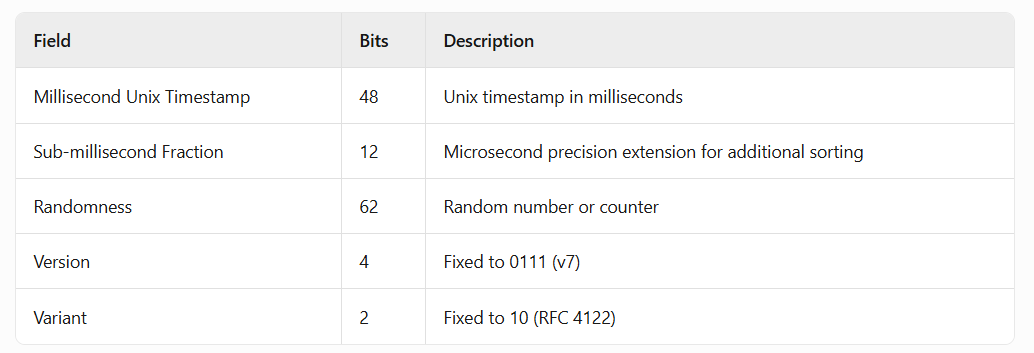

UUIDv7 Structure Design

Design Key Points

- High-precision time prefix (48 bits): Unix timestamp precise to milliseconds, ensuring strict time-based incrementality (requires NTP clock synchronization).

- Tail randomness (62 bits): Ensures distributed uniqueness, avoiding the MAC address leakage risk of v1.

How Orderliness Solves Performance Issues

- B-Tree index optimization: Newly generated UUIDv7 values are always greater than previous ones, so they are appended to the index tail, avoiding intermediate node splits.

- Buffer pool friendly: Sequential writing concentrates new records on few data pages. When a page is full, the database only needs to allocate a new page for appending, reducing old page eviction and disk I/O.

- Range query acceleration: Time order allows

WHERE id > ‘2025-06-01’to be converted to timestamp range filtering, greatly reducing scan range.

Using UUIDv7 in PostgreSQL 18

PostgreSQL 18 introduces several new functions to support UUIDv7 for generation, manipulation, and extraction.

uuidv7() Function: Generates new UUIDv7 values

-- Generate UUIDv7 with current timestamp

SELECT uuidv7();

-- Example output: 0197f96c-b278-7f64-a32f-dae3cabe1ff0

-- Generate UUIDv7 for 1 hour ago

SELECT uuidv7(INTERVAL '-1 hour');

-- Generate UUIDv7 for 30 minutes from now

SELECT uuidv7(INTERVAL '30 minutes');

uuidv4() Function: Alias for the existing gen_random_uuid() function, for use alongside uuidv7

-- Both equivalent

SELECT gen_random_uuid();

SELECT uuidv4();

uuid_extract_timestamp() Function: Now supports UUIDv7 (previously only UUIDv1)

-- Extract timestamp from UUIDv7

SELECT uuid_extract_timestamp(uuidv7());

-- Example output: 2025-09-18 12:20:49.409+00

uuid_extract_version() Function: Detects UUID version

-- Check UUID version

SELECT uuid_extract_version(uuidv7()); -- Returns 7

SELECT uuid_extract_version(uuidv4()); -- Returns 4

Using UUIDv7 as Primary Key in PostgreSQL

-- Create table with UUIDv7 primary key

CREATE TABLE users (

id UUID PRIMARY KEY DEFAULT uuidv7(),

username VARCHAR(50) UNIQUE NOT NULL,

email VARCHAR(100) NOT NULL,

created_at TIMESTAMP WITH TIME ZONE DEFAULT CURRENT_TIMESTAMP

);

This automatically assigns a timestamp-ordered UUID to each new record’s id.

-- Insert data

INSERT INTO users (username, email)

VALUES ('alice', 'alice@example.com');

INSERT INTO users (username, email)

VALUES ('bob', 'bob@example.com');

-- View by UUID time order

SELECT id, username, uuid_extract_timestamp(id) AS uuid_timestamp

FROM users

ORDER BY id;

Performance Advantages: UUIDv7’s timestamp order significantly reduces page splits and cache invalidation, improving B-tree index efficiency.

-- Create performance test table

CREATE TABLE performance_test (

id_v4 UUID DEFAULT uuidv4(),

id_v7 UUID DEFAULT uuidv7(),

data TEXT DEFAULT 'sample data'

);

-- Create indexes using UUIDv7

CREATE INDEX idx_v4 ON performance_test (id_v4);

CREATE INDEX idx_v7 ON performance_test (id_v7);

After bulk insertion, check index hit rates with pg_statio_user_indexes; UUIDv7 typically performs better.

UUIDv7’s inherent time ordering improves sorting performance in most scenarios.

-- Leverage natural ordering of UUIDv7

SELECT id_v7, data

FROM performance_test

ORDER BY id_v7

LIMIT 10;

Compared to UUIDv4’s random order, UUIDv7 query results are returned in creation order, which is more intuitive.

UUIDv7 Best Practices

Suitable Scenarios for UUIDv7:

- Multi-tenant applications: Use UUIDv7 as primary key and create composite indexes on

(tenant_id, id)for uniqueness and time ordering. - Distributed systems: Multiple services can independently generate UUIDv7 while maintaining global time order.

Limitations and Considerations

- Relies on system clock: Requires time synchronization mechanisms like NTP to prevent clock drift.

- Timestamp precision: UUIDv7 uses milliseconds; multiple UUIDs generated within the same millisecond may not reflect exact creation order but remain unique.

- Migration planning: When migrating from UUIDv4 to UUIDv7, check application logic, indexes, and external dependencies.

Summary

PostgreSQL 18’s support for UUIDv7 addresses the performance bottlenecks of UUIDs as primary keys. UUIDv7 combines global uniqueness with sequentiality similar to auto-incrementing integers, making B-tree inserts more efficient and queries faster. For modern applications requiring distribution, high concurrency, and high performance, UUIDv7 offers a practical solution balancing uniqueness and performance.

5. EXPLAIN Enhancements: Intuitive Execution Details

PostgreSQL 18 significantly upgrades the EXPLAIN command, providing richer, more intuitive execution plan information to make query performance analysis and optimization easier for database developers and DBAs.

Automatic Buffer AnalysisEXPLAIN ANALYZE now includes BUFFER statistics by default, without manually adding the BUFFERS option:

- Shared Hits: Number of data blocks read from cache, reflecting memory usage efficiency.

- Shared Reads: Number of data blocks that must be read from disk, helping identify I/O bottlenecks.

- Shared Dirtied: Number of blocks modified by data change operations.

Fine-Grained Index Monitoring

New index scan count statistics let developers precisely understand index usage efficiency:

-- Example output showing index usage

Index Scan using orders_pkey on orders

Index Searches: 1 -- Clearly shows number of index lookups

Buffers: shared hit=2 read=2

Enhanced Statistics

- Support fractional row counts for more precise row estimates.

- Output memory and disk usage details for Material, Window Aggregate, and CTE nodes.

- Display detailed parameter information in window functions.

- Show worker buffer statistics for Parallel Bitmap Heap Scan.

- Output disabled nodes.

- Output WAL buffer information.

Basic Query Analysis

-- Create test table

CREATE TABLE orders (

order_id SERIAL PRIMARY KEY,

customer_id INTEGER NOT NULL,

order_date DATE NOT NULL,

total_amount DECIMAL(10, 2) NOT NULL

);

CREATE INDEX idx_orders_customer_id ON orders(customer_id);

-- Insert test data

INSERT INTO orders (customer_id, order_date, total_amount)

SELECT

(n % 10) + 1,

CURRENT_DATE - (n % 365),

(50 + (random() * 950))::decimal(10,2)

FROM generate_series(1, 50000) n;

-- View enhanced execution plan

EXPLAIN ANALYZE

SELECT *, sum(total_amount) OVER (PARTITION BY customer_id)

FROM orders WHERE order_id > 49900;

Execution Plan:

QUERY PLAN

----------------------------------------------------------------------------------------------------------------------------------------

WindowAgg (cost=13.46..15.04 rows=99 width=50) (actual time=0.630..0.745 rows=100.00 loops=1)

Window: w1 AS (PARTITION BY customer_id)

Storage: Memory Maximum Storage: 17kB

Buffers: shared hit=5 read=2

-> Sort (cost=13.30..13.55 rows=99 width=18) (actual time=0.307..0.333 rows=100.00 loops=1)

Sort Key: customer_id

Sort Method: quicksort Memory: 28kB

Buffers: shared hit=5 read=2

-> Index Scan using orders_pkey on orders (cost=0.29..10.02 rows=99 width=18) (actual time=0.089..0.174 rows=100.00 loops=1)

Index Cond: (order_id > 49900)

Index Searches: 1

Buffers: shared hit=2 read=2

Planning:

Buffers: shared hit=64 read=22

Planning Time: 2.080 ms

Execution Time: 1.343 ms

Execution Plan Insights:

- Buffer usage (cache hits vs. disk reads):

- Planning phase: 64 shared buffer hits, 22 disk reads.

- Execution: 5 shared buffer hits, 2 disk reads.

- Index efficiency statistics: 1 orders_pkey index search using 2 shared buffers.

- Window function memory details: 17kB disk space used.

- Precise row statistics.

- Detailed window function parameters.

WAL Log Analysis

EXPLAIN (ANALYZE, WAL)

INSERT INTO orders (customer_id, order_date, total_amount)

SELECT

(n % 10) + 1,

CURRENT_DATE - (n % 365),

(50 + (random() * 950))::decimal(10,2)

FROM generate_series(1, 50000) n;

Execution Plan:

QUERY PLAN

---------------------------------------------------------------------------------------------------------------------------------------------

Insert on orders (cost=0.00..2000.00 rows=0 width=0) (actual time=767.116..767.118 rows=0.00 loops=1)

Buffers: shared hit=299156 read=2 dirtied=500 written=501

WAL: records=152158 bytes=10427828 buffers full=139

-> Subquery Scan on "*SELECT*" (cost=0.00..2000.00 rows=50000 width=28) (actual time=5.742..336.699 rows=50000.00 loops=1)

Buffers: shared hit=50013

WAL: records=1516 bytes=150084 buffers full=2

-> Function Scan on generate_series n (cost=0.00..1750.00 rows=50000 width=24) (actual time=5.460..227.650 rows=50000.00 loops=1)

Planning Time: 0.114 ms

Execution Time: 767.179 ms

WAL Statistics:

- Monitor log generation for write loads: 1516 WAL records, 150084 bytes.

- Diagnose write bottlenecks: Buffers filled completely twice.

Parallel Query Optimization

EXPLAIN (ANALYZE)

SELECT * FROM orders WHERE customer_id IN (1, 2, 3, 4, 5, 6);

Execution Plan:

QUERY PLAN

-------------------------------------------------------------------------------------------------------------------------------------------------------

Gather (cost=2752.40..10357.99 rows=327855 width=18) (actual time=22.375..121.296 rows=330000.00 loops=1)

Workers Planned: 2

Workers Launched: 2

Buffers: shared hit=3810

-> Parallel Bitmap Heap Scan on orders (cost=2751.40..10029.13 rows=136606 width=18) (actual time=12.868..88.329 rows=110000.00 loops=3)

Recheck Cond: (customer_id = ANY ('{1,2,3,4,5,6}'::integer[]))

Rows Removed by Index Recheck: 53967

Heap Blocks: exact=170 lossy=566

Buffers: shared hit=3810

Worker 0: Heap Blocks: exact=387 lossy=957

Worker 1: Heap Blocks: exact=369 lossy=1055

-> Bitmap Index Scan on idx_orders_customer_id (cost=0.00..2669.44 rows=327855 width=0) (actual time=21.219..21.220 rows=330000.00 loops=1)

Index Cond: (customer_id = ANY ('{1,2,3,4,5,6}'::integer[]))

Index Searches: 1

Buffers: shared hit=266

Planning:

Buffers: shared hit=30

Planning Time: 0.510 ms

Execution Time: 158.523 ms

Parallel Execution Insights:

- Per-worker buffer details:

- Worker 0: 387 exact blocks, 957 lossy blocks.

- Worker 1: 369 exact blocks, 1055 lossy blocks.

- Exact vs. lossy block analysis: Lossy blocks indicate potentially insufficient work_mem for precise tuple location.

Technical Advantages and Value

- Immediate performance diagnostics:

- Lower barrier: Automated buffer stats help beginners quickly identify I/O issues.

- Deep insights: Provide expert users with granular performance data.

- Comprehensive coverage: Single command reveals execution plans, cache usage, index efficiency, and more.

- Optimization guidance:

- Index optimization: Precise index usage statistics prevent over- or under-indexing.

- Memory tuning: work_mem adjustment guidance based on lossy block frequency.

- Query rewriting: Optimize SQL structures using detailed execution cost data.

Future Outlook

While PostgreSQL 18's EXPLAIN enhancements bring significant improvements, some areas could be further refined:

- Output readability: Increased information complexity may require better formatting or visualization tools.

- Historical comparison: Built-in mechanisms for comparing with historical execution plans are lacking, still relying on external tools for performance regression analysis.

- Threshold alerts: No built-in mechanism to warn of anomalies (e.g., unusually high buffer reads), requiring manual plan analysis.

- Visualization: Text-based output remains difficult to interpret intuitively for complex queries, needing third-party tool support.

Summary

PostgreSQL 18's EXPLAIN enhancements represent a major advance in database observability. By automating the collection of key performance metrics and providing deeper execution insights, they significantly lower the barrier to query optimization while giving experienced DBAs more powerful analytical capabilities.

6. OAuth 2.0 Authentication Support: Strengthening Data Protection Barriers

In terms of security, IvorySQL is committed to incorporating various national cryptographic authentication functions to ensure data security. PostgreSQL 18 continues to strengthen identity authentication by introducing support for OAuth 2.0—an open standard authorization protocol that allows an application or service to access a user's resources in another application without providing a username and password.

This feature primarily includes the following core elements:

- OAuth2 validator framework: Provides an extensible framework enabling PostgreSQL to integrate with OAuth 2.0 providers. PostgreSQL itself does not implement specific token verification algorithms (e.g., JWT validation); instead, it delegates this work to an external shared library (*.so file).

- Client authentication support: libpq (PostgreSQL's C client library) now supports the OAuth 2.0 authentication flow.

- Custom validation logic: Allows custom token verification and user mapping logic through a callback mechanism.

Configuring OAuth Authentication

Server Configuration

Selecting OAuth authentication is similar to choosing national cryptographic authentication in HighGo databases—by specifying METHOD as oauth in the pg_hba.conf file to enable OAuth authentication. The OPTIONS must specify issuer and scope parameters, with several optional parameters: validator, map, delegate_ident_mapping. Here is a minimal configuration example:

local all test oauth issuer="http://127.0.0.1:9000" scope="openid postgre"

Specify the external OAuth validator by configuring the newly provided oauth_validator_libraries parameter in the postgresql.conf file, with the content being the library file provided by the OAuth validator.

Client Configuration

Clients must specify the following connection parameters when connecting:

- oauth_issuer: Required, HTTPS URL, the issuer identifier of the authorization server.

- oauth_client_id: Required, OAuth 2.0 client identifier issued by the authorization server.

- oauth_client_secret: Optional, client secret to use when accessing the OAuth authorization server.

- oauth_scope: Optional, scope of access request sent to the authorization server, specified as a space-separated list of OAuth scope identifiers.

Authentication Implementation Principles

The overall OAuth authentication flow is roughly as follows:

Client (libpq)

PostgreSQL implements a non-blocking, state-machine-based asynchronous network client. The state machine includes states like OAUTH_STEP_INIT, OAUTH_STEP_DISCOVERY, OAUTH_STEP_DEVICE_AUTHORIZATION, OAUTH_STEP_TOKEN_REQUEST, and OAUTH_STEP_WAIT_INTERVAL. Its core principles include:

- DISCOVERY: Client retrieves authorization server metadata from user requests.

- DEVICE_AUTHORIZATION: Client sends a request to the authorization server, which returns a device_code and verification_uri. The client outputs: "Visit [verification_uri] and enter the code: [device_code]", prompting user action.

- TOKEN_REQUEST and WAIT_INTERVAL: Poll the authorization server until the user completes authorization; the authorization server returns an access_token to the client.

- The obtained access_token is set in the connection object. libpq sends it as a password to the PostgreSQL server, where the server-side OAuth validator checks this token.

Server

The following PostgreSQL server-side processing of OAuth authentication flow is also implemented via a state machine but is much simpler than the client's, with only three states: OAUTH_STATE_INIT, OAUTH_STATE_ERROR, and OAUTH_STATE_FINISHED. Key steps:

- Parse the client's message, which follows RFC 7628 section 3.1.

- Extract the pure Bearer Token from the client message and verify its format (valid Base64 string).

- Pass the extracted token to the validator module for substantive verification.

- Verification success: State becomes

OAUTH_STATE_FINISHED, returnPG_SASL_EXCHANGE_SUCCESS, and proceed with connection establishment. - Verification failure: Generate a JSON error response compliant with RFC 7628 section 3.2.2, informing the client of the required scope and where to obtain the token. State becomes

OAUTH_STATE_ERROR, and returnPG_SASL_EXCHANGE_CONTINUE, waiting for the client to send the finalKVSEPto end the failed handshake.

- Verification success: State becomes

External Validator

External validors typically need to handle:

- Token verification: Can be done via online or local validation, at the validator's discretion.

- Online validation: The validator usually sends the token to the authorization server's dedicated Introspection Endpoint, which returns a JSON response indicating whether the token is valid.

- Local validation: The validator internally implements a verification process, locally checking the token's signature and validity period. Local validation benefits from quick response but cannot detect token revocations in real time.

- Identity mapping: After successful verification, the validator extracts the unique user identifier from the token and converts it to a database-understandable identity, i.e., a database user.

- Connection decision: If the token is valid and a corresponding database user mapping exists, a session connection is created for that user.

Pros and Cons Analysis

Advantages:

- OAuth2 provides modern, standardized authentication mechanisms, improving security.

- OAuth2 authentication avoids the security risks of password exposure during transmission in traditional password authentication.

- Simplifies database user management, supports unified identity policies and access control, improving management efficiency.

Disadvantages:

- More complex to implement than traditional password authentication, requiring additional configuration and maintenance, including OAuth2 provider setup and management, increasing operational complexity. For example, HighGoDB's national cryptographic authentication function ensures password security with very simple configuration.

- Relies on the availability and reliability of external OAuth providers; if the OAuth provider has issues, database access may be affected.

- Each connection may require additional network requests to verify tokens, potentially increasing connection establishment time, especially in high-concurrency scenarios.

Summary

PostgreSQL 18's introduction of OAuth2 support is an important security enhancement, allowing organizations to use modern authentication mechanisms to protect database access. Through a flexible validator framework and callback mechanism, PostgreSQL 18 can adapt to various OAuth2 deployment scenarios and business needs. Although OAuth2 authentication increases system complexity, it offers significant security advantages, especially in centralized identity management and single sign-on. For organizations looking to adopt modern security best practices, this is a new feature worth considering.

OAuth authentication does not conflict with existing authentication methods; when cumbersome configuration is undesirable, options like HighGoDBs national cryptographic authentication can still be chosen.

When implementing OAuth2 authentication, organizations should carefully evaluate their needs, existing infrastructure, and technical capabilities to ensure successful deployment and operation. Performance, availability, and compatibility should also be considered to provide the best user experience and system reliability.

Conclusion

PostgreSQL 18 achieves comprehensive upgrades in performance, functionality, and security through six core features:

- Asynchronous I/O breaks synchronous blocking bottlenecks, improving throughput in read-intensive scenarios.

- Skip scan enables efficient use of multi-column B-tree indexes for queries on non-leading columns.

- Virtual generated columns strike a flexible balance between storage and computation, optimizing the development experience.

- UUIDv7 addresses the performance pain points of traditional UUID randomness, combining uniqueness with sequentiality.

- EXPLAIN enhancements provide more intuitive and detailed execution insights for query optimization.

- OAuth 2.0 authentication strengthens modern security barriers for data protection.

These features not only meet current database needs for high performance, high concurrency, ease of development, and strong security but also lay a solid foundation for future cross-platform adaptation and functional expansion. They further consolidate PostgreSQL's leading position in the open-source database field, providing more powerful and flexible technical support for various application scenarios.

Original: IvorySQL Community blog

%20(2048%20x%201000%20%E5%83%8F%E7%B4%A0)%20(3).png)

%20(2048%20x%201000%20%E5%83%8F%E7%B4%A0)%20(2).png)